2023. 4. 5. 10:30 · GPT-4 Alternative, Competitor

Vicuna at a Glance

Rapid advances in large language models (LLMs) have revolutionised chatbot systems, producing unprecedented levels of intelligence, as seen in OpenAI's ChatGPT.

Despite ChatGPT's impressive performance, research and open-source innovation in the field are hampered because the details of its training and architecture remain unclear.

Inspired by Meta's LLaMA and the Stanford Alpaca project, the Vicuna team introduced Vicuna-13B, an open-source chatbot built on an improved dataset and scalable infrastructure.

Open-Source, High Quality and Low Cost

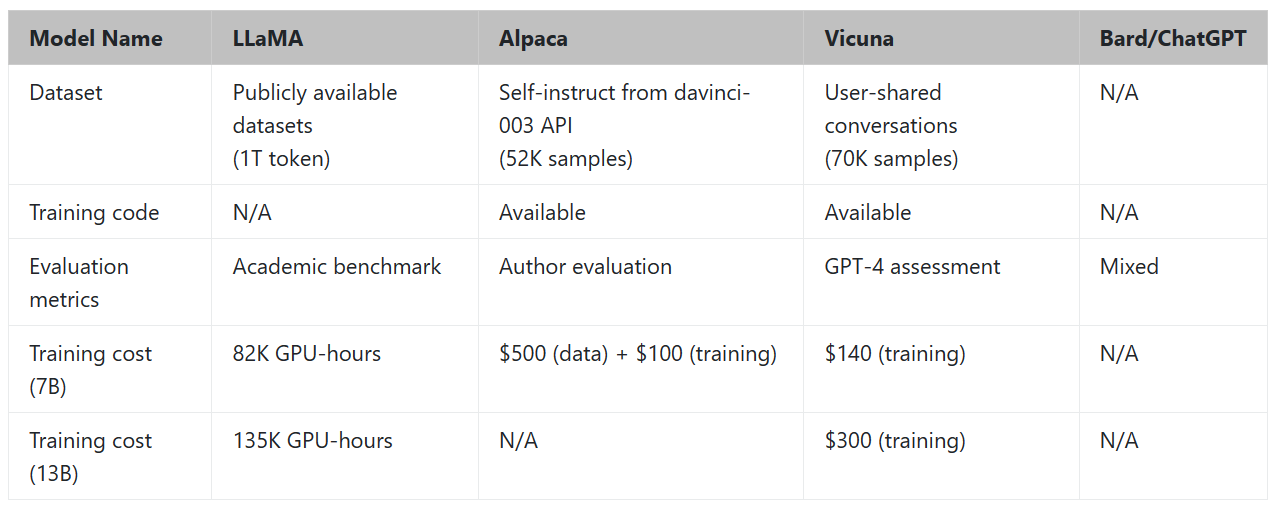

Vicuna-13B was built by fine-tuning a LLaMA base model on user-shared conversations collected from ShareGPT, and it has demonstrated competitive performance against other open-source models such as Stanford Alpaca.
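Fine-tuning on multi-turn conversations means each conversation must first be flattened into a single training string. The sketch below shows one common way to do this, assuming a ShareGPT-style schema where each turn is `{"from": "human" | "gpt", "value": ...}` and assuming the loss is computed only on the chatbot's replies. The role labels `USER`/`ASSISTANT`, the newline separator, and the function name `build_training_example` are hypothetical choices for illustration, not Vicuna's actual template, and the mask is per character rather than per token to avoid depending on a tokenizer.

```python
from typing import Dict, List, Tuple

# Hypothetical role labels and separator; the real Vicuna template may differ.
ROLE_LABELS = {"human": "USER", "gpt": "ASSISTANT"}
SEPARATOR = "\n"


def build_training_example(conversation: List[Dict[str, str]]) -> Tuple[str, List[bool]]:
    """Flatten a multi-turn conversation into one string plus a loss mask.

    Each turn is a dict like {"from": "human", "value": "..."} (an assumed
    ShareGPT-style schema). The mask has one entry per character and is True
    only for characters of the assistant's replies, i.e. the positions whose
    prediction would contribute to the fine-tuning loss.
    """
    text_parts: List[str] = []
    mask: List[bool] = []
    for turn in conversation:
        role = turn["from"]
        if role not in ROLE_LABELS:
            raise ValueError(f"unknown role: {role!r}")
        prefix = f"{ROLE_LABELS[role]}: "
        body = turn["value"] + SEPARATOR
        # The role prefix is context only, never a prediction target.
        text_parts.append(prefix)
        mask.extend([False] * len(prefix))
        # User text is context; assistant text is what the model learns to produce.
        text_parts.append(body)
        mask.extend([role == "gpt"] * len(body))
    return "".join(text_parts), mask


if __name__ == "__main__":
    convo = [
        {"from": "human", "value": "What is 2 + 2?"},
        {"from": "gpt", "value": "4"},
    ]
    text, mask = build_training_example(convo)
    print(repr(text))
    # Only the assistant's reply is kept by the mask.
    print("".join(c for c, m in zip(text, mask) if m))
```

Masking the user turns keeps the model from being trained to imitate user prompts; it still sees them as context when predicting the reply.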

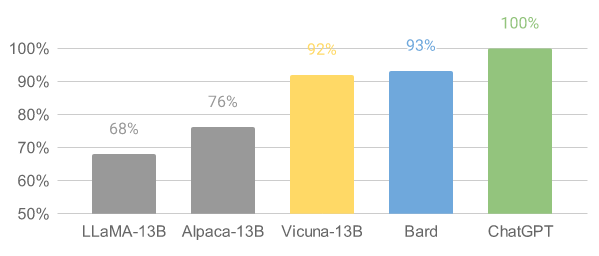

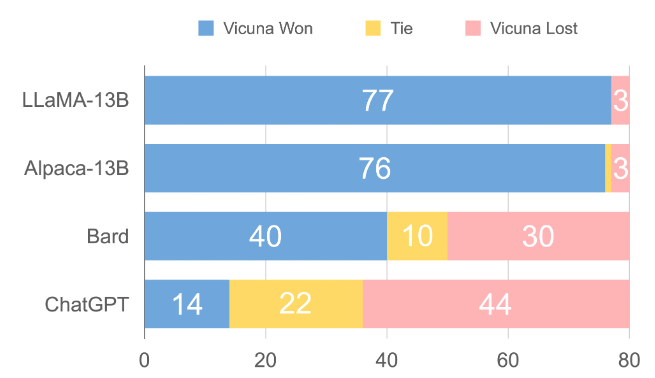

A preliminary evaluation using GPT-4 as a judge shows that Vicuna-13B achieves more than 90% of the quality of OpenAI's ChatGPT and Google Bard, while outperforming other models such as Meta's LLaMA (Large Language Model Meta AI) and Stanford Alpaca in more than 90% of cases.
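The two "90%" figures measure different things. As a minimal sketch, assuming "quality" means a chatbot's summed judge scores divided by the reference chatbot's summed scores over the same questions, and "cases" means the fraction of questions on which one chatbot scores strictly higher than another, the two metrics can be computed as follows. The function names are hypothetical, and the numbers in the usage note are toy values, not Vicuna's results.

```python
from typing import Sequence


def relative_quality(candidate: Sequence[float], reference: Sequence[float]) -> float:
    """Candidate's total judge score as a fraction of the reference's total."""
    if len(candidate) != len(reference):
        raise ValueError("score lists must cover the same questions")
    total_ref = sum(reference)
    if total_ref == 0:
        raise ValueError("reference total score must be non-zero")
    return sum(candidate) / total_ref


def win_rate(candidate: Sequence[float], baseline: Sequence[float]) -> float:
    """Fraction of questions where the candidate scores strictly higher.

    Ties count as non-wins, which makes this a conservative estimate.
    """
    if len(candidate) != len(baseline) or not candidate:
        raise ValueError("need equal-length, non-empty score lists")
    wins = sum(1 for c, b in zip(candidate, baseline) if c > b)
    return wins / len(candidate)


if __name__ == "__main__":
    # Toy per-question scores on a 1-10 scale (illustrative only).
    candidate = [8, 7, 9, 6]
    reference = [9, 8, 9, 8]
    baseline = [5, 7, 4, 3]
    print(f"relative quality: {relative_quality(candidate, reference):.1%}")
    print(f"win rate: {win_rate(candidate, baseline):.0%}")
```

With these toy scores the candidate reaches 30/34 of the reference's total and wins 3 of 4 questions against the baseline; the tie on the second question does not count as a win.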

The cost of training Vicuna-13B is approximately $300.

The training and serving code, along with an online demo, are publicly available for non-commercial use.

How to evaluate a chatbot?

The Vicuna team developed eight categories of questions, such as Fermi problems, role-play scenarios, and coding/math tasks, to test different aspects of a chatbot's performance.

Through careful prompt engineering, GPT-4 is able to generate diverse, challenging questions that baseline models struggle with.

The Vicuna team selects ten questions per category and collects responses from five chatbots: LLaMA, Alpaca, ChatGPT, Bard and Vicuna.

GPT-4 is then asked to rate the quality of the responses based on their helpfulness, relevance, accuracy, and level of detail.
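With eight categories of ten questions each, the benchmark has 80 questions. The loop below sketches a pairwise judging step for them, assuming the judge sees two answers to the same question and replies with two scores from 1 to 10 on its first line. The prompt wording, the score-line format, and the names `build_judge_prompt`, `parse_scores`, `compare`, and `Judge` are hypothetical. No real API is called: `judge` is any callable that sends a prompt to a model such as GPT-4 and returns its text reply, so a stub can stand in for testing.

```python
import re
from typing import Callable, Dict, List, Tuple

CRITERIA = "helpfulness, relevance, accuracy, and level of detail"

# Hypothetical judge interface: takes a prompt, returns the model's review text.
Judge = Callable[[str], str]

# First line of the review is assumed to hold two numbers, e.g. "8 9" or "8, 9.5".
_SCORE_LINE = re.compile(r"^\s*(\d+(?:\.\d+)?)[\s,]+(\d+(?:\.\d+)?)\s*$")


def build_judge_prompt(question: str, answer_1: str, answer_2: str) -> str:
    """Assemble a pairwise review prompt (hypothetical wording)."""
    return (
        f"[Question]\n{question}\n\n"
        f"[Assistant 1]\n{answer_1}\n\n"
        f"[Assistant 2]\n{answer_2}\n\n"
        f"Rate the {CRITERIA} of each response on a scale of 1 to 10. "
        "Output the two scores on the first line, separated by a space, "
        "then explain your reasoning."
    )


def parse_scores(review: str) -> Tuple[float, float]:
    """Extract the two scores from the first line of the judge's review."""
    stripped = review.strip()
    first_line = stripped.splitlines()[0] if stripped else ""
    match = _SCORE_LINE.match(first_line)
    if match is None:
        raise ValueError(f"could not parse scores from: {first_line!r}")
    return float(match.group(1)), float(match.group(2))


def compare(
    questions: List[str],
    answers_a: Dict[str, str],
    answers_b: Dict[str, str],
    judge: Judge,
) -> Tuple[List[float], List[float]]:
    """Score two chatbots question by question using the judge model."""
    scores_a: List[float] = []
    scores_b: List[float] = []
    for q in questions:
        review = judge(build_judge_prompt(q, answers_a[q], answers_b[q]))
        a, b = parse_scores(review)
        scores_a.append(a)
        scores_b.append(b)
    return scores_a, scores_b


if __name__ == "__main__":
    # Stub judge for illustration; a real run would call the judge model here.
    def stub_judge(prompt: str) -> str:
        return "7 9\nAssistant 2 gives a more detailed answer."

    qs = ["How many piano tuners are in Chicago?"]
    print(compare(qs, {qs[0]: "About 100."}, {qs[0]: "Roughly 100, because..."}, stub_judge))
```

Asking for the scores on a fixed first line keeps parsing simple; a practical harness would also retry or skip reviews that fail to parse, and could swap the answer order to check for position bias.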

Release and Repo

The first release will be the training, serving, and evaluation code in a GitHub repo: https://github.com/lm-sys/FastChat.

The Vicuna-13B model weights will also be released. Please find the instructions here.

There are no plans to release the dataset.

Discord server: https://discord.gg/h6kCZb72G7

Twitter: https://twitter.com/lmsysorg

Blog: https://vicuna.lmsys.org/

GitHub: https://github.com/lm-sys/FastChat

Vicuna: An Open-Source Chatbot Impressing GPT-4 with 90%* ChatGPT Quality (vicuna.lmsys.org), by a team with members from UC Berkeley, CMU, Stanford, and UC San Diego.