2023. 3. 29. 23:10ㆍGPT-4 Alternative, Competitor

Artificial intelligence chip startup Cerebras said on Tuesday, March 28, 2023, that it has released open source ChatGPT-like models for the research and business community to use for free, in an effort to foster more collaboration.

Cerebras released seven models

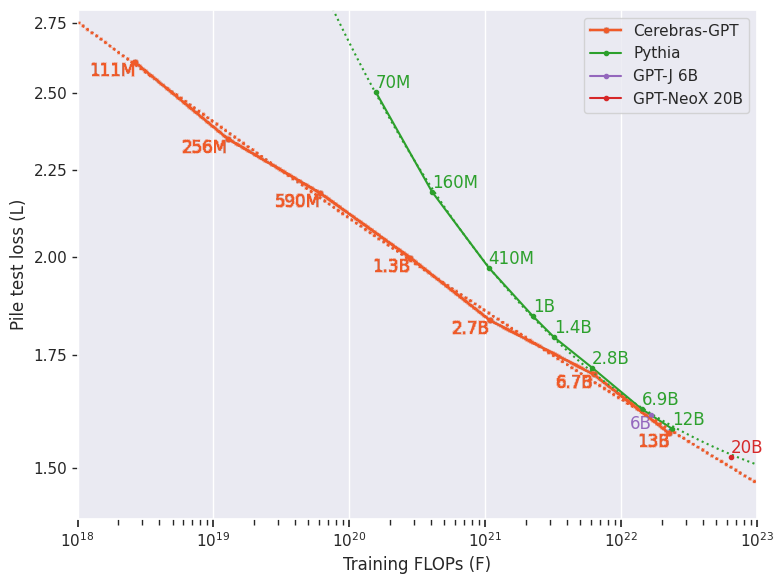

Cerebras released seven models, all trained on its Andromeda AI supercomputer, ranging from a small language model with 111 million parameters to a larger model with 13 billion parameters.
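The Cerebras announcement linked at the end of this post says the models were trained using the Chinchilla formula. As a rough illustration of what that implies for the smallest and largest sizes named here, the sketch below applies three commonly cited rules of thumb: about 20 training tokens per parameter, about 6 FLOPs per parameter per training token, and 2 bytes per parameter for 16-bit weights. These constants and the `estimate` helper are assumptions chosen for illustration, not figures reported in this post.

```python
# Back-of-the-envelope sizing for the two model sizes named in this post.
# All constants are rule-of-thumb assumptions, not numbers from Cerebras.
TOKENS_PER_PARAM = 20       # Chinchilla-style heuristic: ~20 training tokens per parameter
FLOPS_PER_PARAM_TOKEN = 6   # common estimate: ~6 FLOPs per parameter per training token
BYTES_PER_PARAM_FP16 = 2    # 16-bit weights take 2 bytes each


def estimate(params: int) -> dict:
    """Return rough token, compute, and weight-memory estimates for a model."""
    tokens = TOKENS_PER_PARAM * params
    return {
        "params": params,
        "tokens": tokens,
        "train_flops": FLOPS_PER_PARAM_TOKEN * params * tokens,
        "fp16_weight_gb": params * BYTES_PER_PARAM_FP16 / 1e9,
    }


# Only the endpoint sizes mentioned in the post are used here.
SIZES = {
    "smallest (111M)": 111_000_000,
    "largest (13B)": 13_000_000_000,
}

if __name__ == "__main__":
    for name, n in SIZES.items():
        e = estimate(n)
        print(
            f"{name}: ~{e['tokens']:.2e} tokens, "
            f"~{e['train_flops']:.2e} training FLOPs, "
            f"~{e['fp16_weight_gb']:.2f} GB of fp16 weights"
        )
```

Because the token budget scales with the parameter count, the estimated training compute grows roughly with the square of the model size under these assumptions, which is why the gap between the 111 million and 13 billion parameter models is far larger in compute than in parameters.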

First open source ChatGPT / GPT alternative

These models are the first family of GPT models released under the Apache 2.0 open source license.

Models with more parameters are able to perform more complex generative functions.

For example, GPT-3, the model family behind OpenAI's ChatGPT chatbot, has 175 billion parameters (OpenAI has not disclosed the size of GPT-4). ChatGPT is able to generate poetry and research, which has helped attract a lot of interest and funding to AI more broadly.

Model Comparison Table

Cerebras AI Hardware and Scaling Technique

Cerebras's CEO said the company's largest model took just over a week to train, work that can typically take several months, thanks to the architecture of the Cerebras system, which includes a chip the size of a dinner plate designed for AI training.

By comparison, Meta's OPT family ranges from 125M to 175B parameters, and its largest model was trained on 992 Nvidia A100 GPUs. EleutherAI's 20B-parameter GPT-NeoX used a combination of data, tensor, and pipeline parallelism to train the model across 96 Nvidia A100 GPUs.

Most AI models today are trained on Nvidia GPUs, but more and more startups like Cerebras are trying to grab a share of the market.

For more information on Cerebras

Cerebras-GPT: A Family of Open, Compute-efficient, Large Language Models - Cerebras


Cerebras open sources seven GPT-3 models from 111 million to 13 billion parameters. Trained using the Chinchilla formula, these models set new benchmarks for accuracy and compute efficiency.

www.cerebras.net

cerebras (Cerebras) (huggingface.co)


Cerebras is the inventor of the wafer scale engine – a single chip that packs the compute performance of a GPU cluster. Cerebras CS-2 systems are designed to train large language models upward of 1 trillion parameters using only data parallelism. This i

huggingface.co
